Principal component analysis of a trajectory

Here we compute the principal component analysis of a trajectory.

Last updated: December 2022 with MDAnalysis 2.4.0-dev0

Minimum version of MDAnalysis: 1.0.0

Packages required:

Optional packages for visualisation:

Throughout this tutorial we will include cells for visualising Universes with the NGLView library. However, these will be commented out, and we will show the expected images generated instead of the interactive widgets.

[1]:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

%matplotlib inline

import MDAnalysis as mda

from MDAnalysis.tests.datafiles import PSF, DCD

from MDAnalysis.analysis import pca, align

# import nglview as nv

import warnings

# suppress some MDAnalysis warnings about writing PDB files

warnings.filterwarnings('ignore')

Loading files

The test files we will be working with here feature adenylate kinase (AdK), a phosophotransferase enzyme. ([BDPW09]) The trajectory DCD samples a transition from a closed to an open conformation.

[2]:

u = mda.Universe(PSF, DCD)

Principal component analysis

Principal component analysis is a common linear dimensionality reduction technique that maps the coordinates in each frame of your trajectory to a linear combination of orthogonal vectors. The vectors are called principal components, and they are ordered such that the first principal component accounts for the most variance in the original data (i.e. the largest uncorrelated motion in your trajectory), and each successive component accounts for less and less variance. The frame-by-frame conformational fluctuation can be considered a linear combination of the essential dynamics yielded by the PCA. Please see [ALB93], [Jol02], [SJS14], or [SS18] for a more in-depth introduction to PCA.

Trajectory coordinates can be transformed onto a lower-dimensional space (essential subspace) constructed from these principal components in order to compare conformations. You can thereby visualise the motion described by that component.

In MDAnalysis, the method implemented in the PCA class (API docs) is as follows:

Optionally align each frame in your trajectory to the first frame.

Construct a 3N x 3N covariance for the N atoms in your trajectory. Optionally, you can provide a mean; otherwise the covariance is to the averaged structure over the trajectory.

Diagonalise the covariance matrix. The eigenvectors are the principal components, and their eigenvalues are the associated variance.

Sort the eigenvalues so that the principal components are ordered by variance.

Note

Principal component analysis algorithms are deterministic, but the solutions are not unique. For example, you could easily change the sign of an eigenvector without altering the PCA. Different algorithms are likely to produce different answers, due to variations in implementation. MDAnalysis may not return the same values as another package.

[3]:

aligner = align.AlignTraj(u, u, select='backbone',

in_memory=True).run()

You can choose how many principal components to save from the analysis with n_components. The default value is None, which saves all of them. You can also pass a mean reference structure to be used in calculating the covariance matrix. With the default value of None, the covariance uses the mean coordinates of the trajectory.

[4]:

pc = pca.PCA(u, select='backbone',

align=True, mean=None,

n_components=None).run()

The principal components are saved in pc.p_components. If you kept all the components, you should have an array of shape \((n_{atoms}\times3, n_{atoms}\times3)\).

[5]:

backbone = u.select_atoms('backbone')

n_bb = len(backbone)

print('There are {} backbone atoms in the analysis'.format(n_bb))

print(pc.p_components.shape)

There are 855 backbone atoms in the analysis

(2565, 2565)

The variance of each principal component is in pc.variance. For example, to get the variance explained by the first principal component to 5 decimal places:

[6]:

print(f"PC1: {pc.variance[0]:.5f}")

PC1: 4203.19053

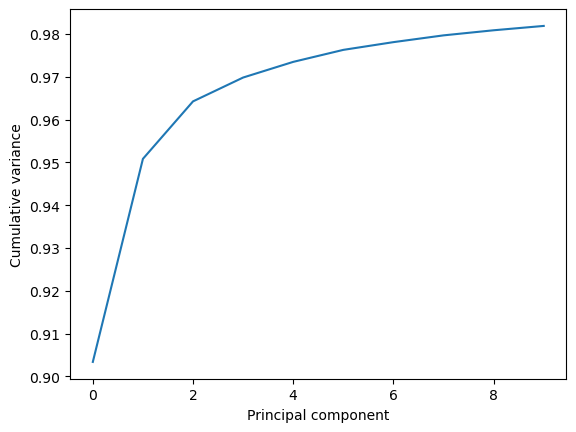

This variance is somewhat meaningless by itself. It is much more intuitive to consider the variance of a principal component as a percentage of the total variance in the data. MDAnalysis also tracks the percentage cumulative variance in pc.cumulated_variance. As shown below, the first principal component contains 90.3% the total trajectory variance. The first three components combined account for 96.4% of the total variance.

[7]:

for i in range(3):

print(f"Cumulated variance: {pc.cumulated_variance[i]:.3f}")

Cumulated variance: 0.903

Cumulated variance: 0.951

Cumulated variance: 0.964

[8]:

plt.plot(pc.cumulated_variance[:10])

plt.xlabel('Principal component')

plt.ylabel('Cumulative variance')

plt.show()

Visualising projections into a reduced dimensional space

The pc.transform() method transforms a given atom group into weights \(\mathbf{w}_i\) over each principal component \(i\).

\(\mathbf{r}(t)\) are the atom group coordinates at time \(t\), \(\mathbf{\overline{r}}\) are the mean coordinates used in the PCA, and \(\mathbf{u}_i\) is the \(i\)th principal component eigenvector \(\mathbf{u}\).

While the given atom group must have the same number of atoms that the principal components were calculated over, it does not have to be the same group.

Again, passing n_components=None will tranform your atom group over every component. Below, we limit the output to projections over 3 principal components only.

[9]:

transformed = pc.transform(backbone, n_components=3)

transformed.shape

[9]:

(98, 3)

The output has the shape (n_frames, n_components). For easier analysis and plotting we can turn the array into a DataFrame.

[10]:

df = pd.DataFrame(transformed,

columns=['PC{}'.format(i+1) for i in range(3)])

df['Time (ps)'] = df.index * u.trajectory.dt

df.head()

[10]:

| PC1 | PC2 | PC3 | Time (ps) | |

|---|---|---|---|---|

| 0 | 118.408413 | 29.088241 | 15.746624 | 0.0 |

| 1 | 115.561879 | 26.786797 | 14.652498 | 1.0 |

| 2 | 112.675616 | 25.038766 | 12.920274 | 2.0 |

| 3 | 110.341467 | 24.306984 | 11.427098 | 3.0 |

| 4 | 107.584302 | 23.464154 | 11.612104 | 4.0 |

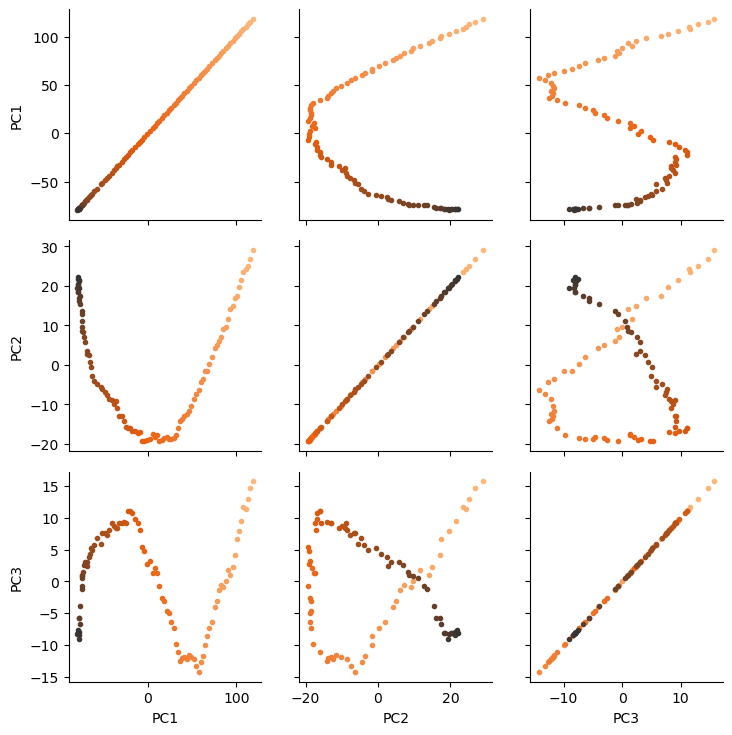

There are several ways we can visualise the data. Using the Seaborn’s PairGrid tool is the quickest and easiest way, if you have seaborn already installed.

Note

You will need to install the data visualisation library Seaborn for this function.

[11]:

import seaborn as sns

g = sns.PairGrid(df, hue='Time (ps)',

palette=sns.color_palette('Oranges_d',

n_colors=len(df)))

g.map(plt.scatter, marker='.')

plt.show()

Another way to investigate the essential motions of the trajectory is to project the original trajectory onto each of the principal components, to visualise the motion of the principal component. The outer product \(\otimes\) of the weights \(\mathbf{w}_i(t)\) for principal component \(i\) with the eigenvector \(\mathbf{u}_i\) describes fluctuations around the mean on that axis, so the projected trajectory \(\mathbf{r}_i(t)\) is simply the fluctuations added onto the mean positions \(\mathbf{\overline{r}}\).

Below, we generate the projected coordinates of the first principal component. The mean positions are stored at pc.mean.

[12]:

pc1 = pc.p_components[:, 0]

trans1 = transformed[:, 0]

projected = np.outer(trans1, pc1) + pc.mean.flatten()

coordinates = projected.reshape(len(trans1), -1, 3)

We can create a new universe from this to visualise the movement over the first principal component.

[13]:

proj1 = mda.Merge(backbone)

proj1.load_new(coordinates, order="fac")

[13]:

<Universe with 855 atoms>

[14]:

# view = nv.show_mdanalysis(proj1.atoms)

# view

If you have nglview installed, you can view the trajectory in the notebook. Otherwise, you can write the trajectory out to a file and use another program such as VMD. Below, we create a movie of the component.

[15]:

# from nglview.contrib.movie import MovieMaker

# movie = MovieMaker(

# view,

# step=4, # keep every 4th frame

# output='pc1.gif',

# render_params={"factor": 3}, # set to 4 for highest quality

# )

# movie.make()

Measuring convergence with cosine content

The essential modes of a trajectory usually describe global, collective motions. The cosine content of a principal component can be interpreted to determine whether proteins are transitioning between conformational states. However, random diffusion can also appear to produce collective motion. The cosine content can measure the convergence of a trajectory and indicate poor sampling.

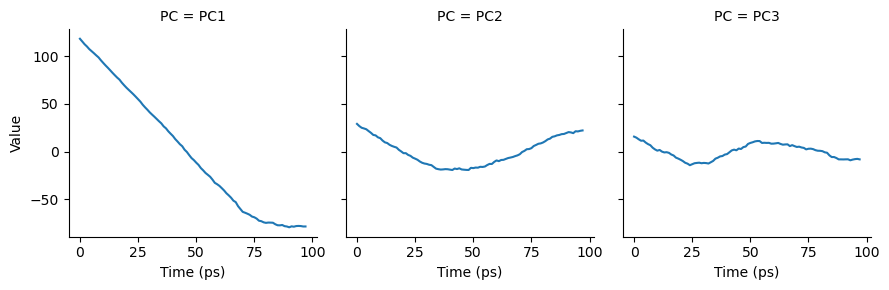

The cosine content of a principal component measures how similar it is to a cosine shape. Values range from 0 (no similarity to a cosine) and 1 (a perfect cosine shape). If the values of the first few principal components are close to 1, this can indicate poor sampling, as the motion of the particles may not be distinguished from random diffusion. Values below 0.7 do not indicate poor sampling.

For more information, please see [MLS09].

Note

[Hes02] first published the usage of cosine content to evaluate sampling. Please cite this paper when using the MDAnalysis.analysis.pca.cosine_content method in published work.

Below we calculate the cosine content of the first five principal components in the transformed subspace. Note that this is an example only, to dmonstrate how to use the method; the first few principal components of short simulations always represent random diffusion ([Hes02]).

[16]:

for i in range(3):

cc = pca.cosine_content(transformed, i)

print(f"Cosine content for PC {i+1} = {cc:.3f}")

Cosine content for PC 1 = 0.960

Cosine content for PC 2 = 0.906

Cosine content for PC 3 = 0.723

As can be seen, the cosine content of each component is quite high. If we plot the transformed components over time, we can see that each component does resemble a cosine curve.

[17]:

# melt the dataframe into a tidy format

melted = pd.melt(df, id_vars=["Time (ps)"],

var_name="PC",

value_name="Value")

g = sns.FacetGrid(melted, col="PC")

g.map(sns.lineplot,

"Time (ps)", # x-axis

"Value", # y-axis

ci=None) # no confidence interval

plt.show()

References

[1] Andrea Amadei, Antonius B. M. Linssen, and Herman J. C. Berendsen. Essential dynamics of proteins. Proteins: Structure, Function, and Bioinformatics, 17(4):412–425, 1993. _eprint: https://onlinelibrary.wiley.com/doi/pdf/10.1002/prot.340170408. URL: http://onlinelibrary.wiley.com/doi/abs/10.1002/prot.340170408, doi:https://doi.org/10.1002/prot.340170408.

[2] Oliver Beckstein, Elizabeth J. Denning, Juan R. Perilla, and Thomas B. Woolf. Zipping and Unzipping of Adenylate Kinase: Atomistic Insights into the Ensemble of Open↔Closed Transitions. Journal of Molecular Biology, 394(1):160–176, November 2009.

URL: https://linkinghub.elsevier.com/retrieve/pii/S0022283609011164, doi:10.1016/j.jmb.2009.09.009.

[3] Richard J. Gowers, Max Linke, Jonathan Barnoud, Tyler J. E. Reddy, Manuel N. Melo, Sean L. Seyler, Jan Domański, David L. Dotson, Sébastien Buchoux, Ian M. Kenney, and Oliver Beckstein. MDAnalysis: A Python Package for the Rapid Analysis of Molecular Dynamics Simulations. Proceedings of the 15th Python in Science Conference, pages 98–105, 2016.

URL: https://conference.scipy.org/proceedings/scipy2016/oliver_beckstein.html, doi:10.25080/Majora-629e541a-00e.

[4] I. T. Jolliffe. Principal Component Analysis. Springer Series in Statistics. Springer-Verlag, New York, 2 edition, 2002. ISBN 978-0-387-95442-4. URL: http://www.springer.com/gp/book/9780387954424, doi:10.1007/b98835.

[5] Naveen Michaud-Agrawal, Elizabeth J. Denning, Thomas B. Woolf, and Oliver Beckstein. MDAnalysis: A toolkit for the analysis of molecular dynamics simulations. Journal of Computational Chemistry, 32(10):2319–2327, July 2011.

URL: http://doi.wiley.com/10.1002/jcc.21787, doi:10.1002/jcc.21787.

[6] Florian Sittel, Abhinav Jain, and Gerhard Stock. Principal component analysis of molecular dynamics: on the use of Cartesian vs. internal coordinates. The Journal of Chemical Physics, 141(1):014111, July 2014. doi:10.1063/1.4885338.

[7] Florian Sittel and Gerhard Stock. Perspective: Identification of collective variables and metastable states of protein dynamics. The Journal of Chemical Physics, 149(15):150901, October 2018. Publisher: American Institute of Physics. URL: http://aip.scitation.org/doi/10.1063/1.5049637, doi:10.1063/1.5049637.